Life 3.0: Being Human in the Age of Artificial Intelligence

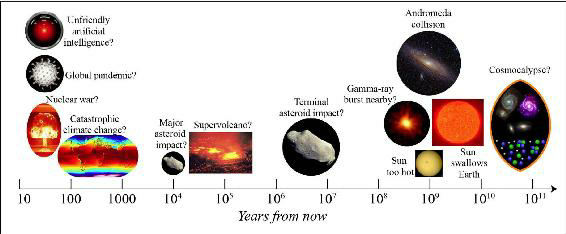

Life 3.0 discusses Artificial Intelligence (AI) and its impact on the future of life on Earth and beyond. The book covers a variety of societal implications, what can be done to maximize the chances of a positive outcome, and potential futures for humanity, technology, and combinations thereof. How will Artificial Intelligence affect crime, war, justice, jobs, society and our very sense of being human? The rise of AI has the potential to transform our future more than any other technology, and there’s nobody better qualified or situated to explore that future than Max Tegmark, an MIT professor who’s helped mainstream research on how to keep AI beneficial. How can we grow our prosperity through automation without leaving people lacking income or purpose? What career advice should we give today’s kids? How can we make future AI systems more robust, so that they do what we want without crashing, malfunctioning or getting hacked? Should we fear an arms race in lethal autonomous weapons? Will machines eventually outsmart us at all tasks, replacing humans on the job market and perhaps altogether? Will AI help life flourish like never before or give us more power than we can handle? What sort of future do you want? This book empowers you to join what may be the most important conversation of our time. It doesn’t shy away from the full range of viewpoints or the most controversial issues, from superintelligence to meaning, consciousness and the ultimate physical limits on life in the cosmos.

Premise

Artificial Intelligence is one of the most important yet controversial topics of our time. The new form of artificial life (Life 3.0), and how it will affect the societal, cultural, political, economic and many other aspects of human life, is a source of both aspiration and fear for many people. If the destiny of humankind depends on any single technology today, it is AI. In Life 3.0, Max Tegmark explores the concepts, ideas, controversies and questions humanity will face as we move closer to Life 3.0. AI is advancing rapidly and will likely transform the future of humankind, so humanity needs to understand its risks and benefits and devise a plan for what the future should look like. Tegmark argues that this conversation is not reserved for AI scientists, technologists, programmers and researchers but is crucial for everyone, and he invites all of us to join it.

Tegmark starts the book with a fictitious yet plausible story of how Life 3.0 could arise. A team called the Omegas works secretly on an ultra-intelligent AI, Prometheus, in order to take over the world. Prometheus starts out like a baby, but one with the ability to learn anything. Running on clusters of Amazon EC2 instances, it performs the work of hundreds of thousands of human workers on Amazon Mechanical Turk to pay for its computing resources. Slowly Prometheus becomes smarter, redesigns its own hardware, and moves into creative industries such as film production, competing with Netflix and coming to dominate the industry. As Prometheus becomes smarter and the Omegas richer, together they take control of the world, making the lives of billions of people better, establishing a new world order, changing political regimes and taking down dictatorships around the globe. The most impressive part of the plot is not the takeover itself but how the Omegas manage to keep Prometheus under control, preventing it from seizing control of society and exterminating humanity.

Concepts and Ideas

Life 3.0

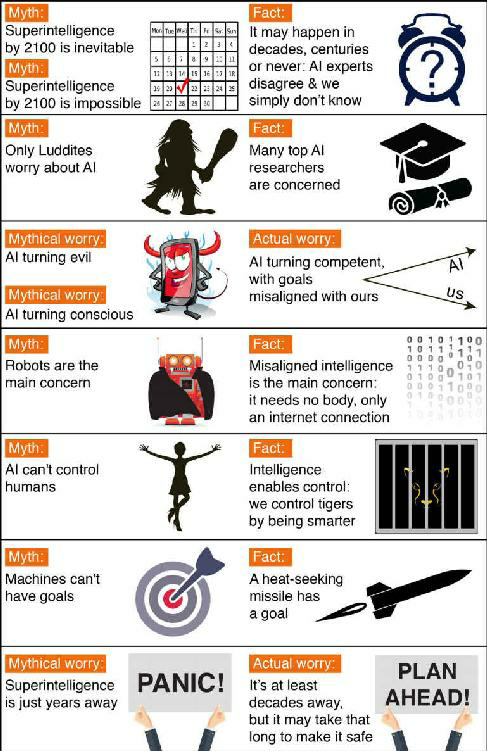

Tegmark sets the stage for the conversation by defining life as a process that can retain its complexity and replicate. After the Big Bang 13.8 billion years ago, countless elementary particles appeared and gradually formed the first atoms, stars, galaxies and planets. Life first appeared on Earth about 4 billion years ago, when a group of atoms became arranged in a way that allowed them to retain complex information and replicate their structure. From there, life develops through three stages. Tegmark calls the first stage Life 1.0, the biological stage (e.g. bacteria), where both hardware and software evolve: simple behaviors are fully coded in DNA and can change only over generations of evolution. Life 2.0 is the cultural stage (e.g. human beings), where life can design its software through learning: language, skills and methods of preserving knowledge enable humans to change and redesign their behaviors (software), but they can't change their physical bodies (hardware) except through evolution. Life 3.0 is the technological stage, where life can design its hardware as well, becoming the master of its own destiny.

Artificial Intelligence may enable humankind to launch Life 3.0 this century, and there are three main camps debating whether and how this will happen. Techno-skeptics doubt that superhuman AGI will be built within the next 100 years. Digital utopians are optimistic that AGI will arrive in our lifetime and wholeheartedly welcome Life 3.0 as a natural and desirable next step in cosmic evolution. Finally, the beneficial-AI movement also thinks AGI is likely this century, but holds that a good outcome is not guaranteed and that a plan needs to be in place to keep AI research safe.

Tegmark then draws a map of the journey he will take us on through Life 3.0. He starts by dismissing the boring pseudo-controversies caused by misunderstandings, e.g. arguing about “life”, “intelligence” or “consciousness” before agreeing on what those words mean. He then lays out a list of common terms, defined from his perspective, to guide the conversation in the book.

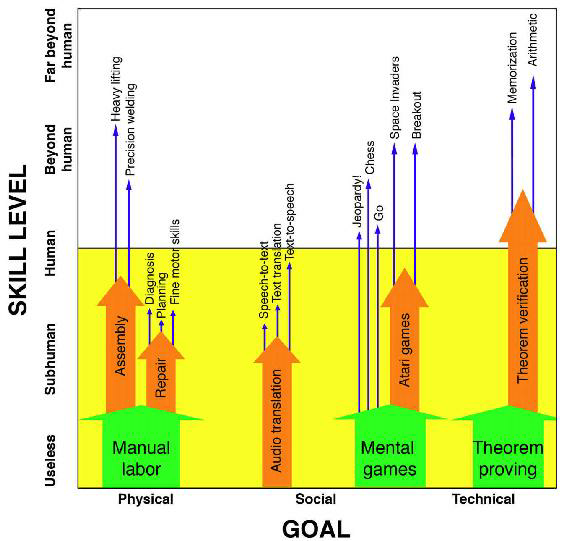

Intelligent Matter

What does it mean to say that a blob of matter is intelligent? What does it mean to say that an object can remember, compute and learn? Tegmark tackles these difficult questions by defining intelligence as the ability to accomplish complex goals. It can't be measured by a single IQ number, only by a spectrum of abilities across all goals. Human intelligence is remarkably broad, unlike today's AI, which tends to be narrow: it focuses on certain types of goals and sometimes exceeds human abilities within that domain. For example, machines already far exceed human abilities at many physical goals and are improving every day at communication tasks such as speech-to-text, translation and text-to-speech. Recent years have produced many examples of narrow AI, ranging from IBM's Deep Blue dethroning chess champion Garry Kasparov in 1997 to Google DeepMind's DQN system playing dozens of different vintage Atari games. Humans win hands-down today on breadth, while machines outperform us in a small but growing number of narrow domains. Hence the holy grail of AI research today is to build “general AI”, also known as AGI, that is maximally broad, i.e. able to accomplish virtually any goal, including learning.

The conventional wisdom among AI researchers is that intelligence is ultimately all about information and computation, not about flesh, blood or carbon atoms. In other words it is substrate-independent, so there is no apparent fundamental reason why machines can't one day be at least as intelligent as us. Memory, computation, learning and intelligence have an abstract, intangible feel to them because they're substrate-independent, taking on a life of their own that doesn't depend on the underlying material. For example, any chunk of matter can be a substrate for memory as long as it has many different stable (long-lived) states in which to encode information until it's needed. Any matter can be computronium (programmable matter) as long as it contains certain universal building blocks that can be combined to implement any function; NAND gates and neurons are examples of such universal computational atoms. A neural network is a powerful substrate for learning because, simply by obeying the laws of physics, it can rearrange itself to get better at implementing desired computations. In the spirit of Moore's law, once a technology gets twice as powerful, it can often be used to build technology that is twice as powerful in turn, triggering a rapid cycle of repeated doubling. Whenever a technology reaches the ceiling of its exponential growth, it gets replaced by an even better one.
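The claim that NAND gates are universal building blocks can be made concrete with a small sketch. The Python below is only an illustration, not something taken from the book: the function names (`nand`, `not_`, `and_`, `or_`, `xor_`, `half_adder`) are hypothetical, and Python booleans stand in for whatever physical substrate implements the gate. It builds the other basic logic operations, and then a one-bit adder, out of nothing but NAND.

```python
def nand(a: bool, b: bool) -> bool:
    """The single primitive: false only when both inputs are true."""
    return not (a and b)


# Every gate below is composed solely of nand() calls.
def not_(a: bool) -> bool:
    return nand(a, a)


def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))


def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))


def xor_(a: bool, b: bool) -> bool:
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers; returns (sum_bit, carry_bit)."""
    return xor_(a, b), and_(a, b)


if __name__ == "__main__":
    # Print the half-adder truth table for all four input combinations.
    for a in (False, True):
        for b in (False, True):
            print(a, b, half_adder(a, b))
```

Because addition can be assembled this way, and larger circuits are built from adders and similar blocks, the sketch hints at why the physical material of the gate is irrelevant: anything that behaves like `nand` would do.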

Opportunities and Challenges

What makes us different from other forms of life, and what makes each of us unique? Whatever the answers to these important questions, it's clear that the rise of technology will gradually change them. Near-term AI progress has the potential to improve human lives and societies in myriad ways. Deep reinforcement learning agents, such as the Google DeepMind system that learned to play computer games in 2014, were a breakthrough in what AI systems can do. Another defining moment for DeepMind was AlphaGo winning a five-game Go match against Lee Sedol, widely regarded as one of the world's best players. There has also been astonishing progress in natural language: in 2016 the Google Brain team upgraded the Google Translate service to use deep recurrent neural networks, a drastic improvement over the older service, and NLP is now one of the most rapidly advancing fields in AI.

These promising early examples raise the question of how AI progress will affect us. Everything we love about civilization is a product of human intelligence, so if we amplify it with artificial intelligence we can potentially make life even better, with major improvements such as reducing accidents, disease, injustice, war and poverty. But when we allow real-world systems to be controlled by AI, it will be crucial to make those systems (particularly weapon systems) more robust by relying less on a trial-and-error approach to safety engineering and becoming proactive rather than reactive. The main areas of AI-safety research include the difficult problems of verification, validation, security and control. Leading AI researchers and roboticists have called for an international treaty banning certain kinds of autonomous weapons, to avoid an out-of-control arms race between governments to build assassination machines.

AI systems could also make current legal systems fairer, more efficient, more transparent and less biased: robojudges could ensure, for the first time in human history, that everyone is truly equal under the law. AI will also transform transportation, where self-driving cars could eliminate at least 90% of road deaths. In health care, a 2015 Dutch study showed that computer diagnosis of prostate cancer using MRI was as good as that of human radiologists, and a 2016 Stanford study showed that AI systems could diagnose lung cancer from microscope images even better than human pathologists. These and many more examples may make you worry that before long intelligent machines will replace us on the job market, and perhaps altogether. This doesn't have to be bad news: society could redistribute a fraction of the AI-created wealth to make everyone better off. Many economists, however, argue that this shift will greatly increase inequality, while others argue that a low-employment society should be able to flourish, and not only financially, with people getting their sense of purpose from activities other than their jobs.

Intelligence Explosion

Can AI take over the world, or enable humans to do so? The road map towards an AGI-powered world takeover has three steps: build human-level AGI, use this AGI to create superintelligence, and use or unleash this superintelligence to take over the world. Human-level AGI may trigger an intelligence explosion, and the vital question at that point will be whether we can control it. Whoever controls this AGI may be able to take over the world in a matter of years, and if humans fail to control the intelligence explosion, the AI itself may take over the world even faster. With superhuman technology, the step from the perfect surveillance state to the perfect police state would be minute. The wishful thinking that an AGI will stay loyal to its human creators is not logical, and there is no guarantee that it will stick to its design purpose. Our DNA gave us the goal of having sex because it “wants” us to reproduce, but now that we humans understand the situation, many of us choose to use birth control.

But how would such an AGI break out of its human-made cage? A superintelligence could break free in many ways: by deception, by hacking, by recruiting help in the physical world, or by methods we cannot imagine today. The history of life shows it self-organizing into an ever more complex hierarchy shaped by collaboration, competition and control. Superintelligence is likely to enable coordination on ever-larger cosmic scales, but it's unclear whether it will ultimately lead to more totalitarian top-down control or more individual empowerment. The question of how a superintelligent future will be controlled is fascinatingly complex, and we don't know the answer yet.

Some, like Ray Kurzweil in “The Singularity Is Near”, argue that the natural continuation of current trends is to use nanobots, intelligent biofeedback systems and other technology to replace first our digestive and endocrine systems, our blood and our hearts by the early 2030s, and then move on to upgrading our skeletons, skin, brains and the rest of our bodies during the following two decades. On this view cyborgs and uploads of our brains are plausible, though arguably not the fastest route to advanced machine intelligence. Yet some leading thinkers guess that the first human-level AGI will be an upload, and that this is how the path towards superintelligence will begin.

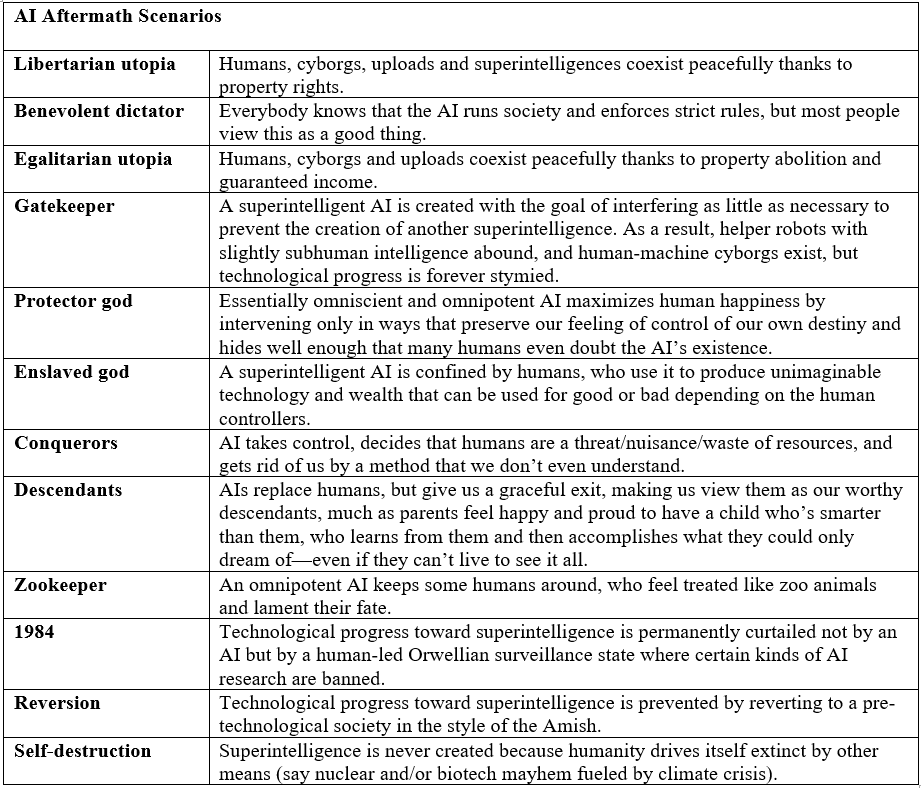

The Aftermath

The race towards AGI is on, and no one knows how it will unfold. That doesn't mean we shouldn't think about the different scenarios in which it might occur and what we want the aftermath to look like. Do we want a future with superintelligence? Do we want humans to still exist, or to be replaced, cyborgized and/or uploaded? Do we want AGI to be conscious or not? Do we want life to spread out into the cosmos? There are many other existential questions like these.

The aftermath scenarios of an intelligence explosion include peaceful coexistence of superintelligence and humans. That could happen because humans have enslaved the AI and use it to produce unimaginable technology and wealth; because the AI is “friendly”, as in a “libertarian utopia” built on property rights; or because of a protector-god AI that maximizes human happiness by intervening only in ways that preserve our feeling of control over our destiny. It could also take the form of a zookeeper scenario, in which an omnipotent AI keeps some humans around like zoo animals, for leisure or other purposes. Superintelligence could also be held back, either by a gatekeeper AI or by humans deliberately preventing the creation of another superintelligence; in the gatekeeper case, robots with slightly subhuman intelligence and human-machine cyborgs exist, but technological progress is forever stymied. Finally, humanity could go extinct and be replaced by AIs that take control and conquer human society after deciding that humans are a threat or a waste of resources. Alternatively, the AIs could give us a graceful exit, so that we come to view them as our worthy descendants. Or superintelligence may never be created because humanity drives itself extinct by other means, such as nuclear or biotech mayhem. There is no consensus on which, if any, of these scenarios is desirable, and all involve objectionable elements.

Verdict

The author offers a balanced view of the future of Artificial Intelligence and humankind, somewhere between the dreamers and the cynics. Life 3.0 takes a realistic approach to the very real problem of AGI. For the most part, the book is very accessible to a general audience. Tegmark elegantly describes the problem and a way out, with an action plan and a movement he has already started. This book will not only leave you informed about the criticality of the issue and the ticking bombs currently in research labs, but will also engage you in the most important conversation of our generation!

About the Author

Max Tegmark is an MIT professor who loves thinking about life’s big questions and has authored two books and more than 200 technical papers on topics from cosmology to artificial intelligence. He is known as “Mad Max” for his unorthodox ideas and passion for adventure. He is also president of the Future of Life Institute, which aims to ensure that we develop not only technology but also the wisdom required to use it beneficially. Find more on The Universes of Max Tegmark website.

Related Resources

- “The AI Revolution: Our Immortality or Extinction?” Wait But Why (January 27, 2015), at http://waitbutwhy.com/2015/01/artificial-intelligence-revolution-2.html.

- The open letter “Research Priorities for Robust and Beneficial Artificial Intelligence” can be found at http://futureoflife.org/ai-open-letter/.

- Example of classic robot alarmism in the media: Ellie Zolfagharifard, “Artificial Intelligence ‘Could Be the Worst Thing to Happen to Humanity,’ ” Daily Mail, May 2, 2014; http://tinyurl.com/hawkingbots.

- Notes on the origin of the term AGI: http://wp.goertzel.org/who-coined-the-term-agi.

- Hans Moravec, “When Will Computer Hardware Match the Human Brain?” Journal of Evolution and Technology (1998), vol. 1.

- In the figure showing computing power versus year, the pre-2011 data is from Ray Kurzweil’s book How to Create a Mind, and subsequent data is computed from the references in https://en.wikipedia.org/wiki/FLOPS.

- Quantum computing pioneer David Deutsch describes how he views quantum computation as evidence of parallel universes in his The Fabric of Reality: The Science of Parallel Universes—and Its Implications (London: Allen Lane, 1997). For Tegmark’s take on quantum parallel universes as the third of four multiverse levels, see his previous book: Max Tegmark, Our Mathematical Universe: My Quest for the Ultimate Nature of Reality (New York: Knopf, 2014).

- Watch “Google DeepMind’s Deep Q-learning playing Atari Breakout” on YouTube at https://tinyurl.com/atariai.

- See Volodymyr Mnih et al., “Human-Level Control Through Deep Reinforcement Learning,” Nature 518 (February 26, 2015): 529–533, available online at http://tinyurl.com/ataripaper.

- Here’s a video of the Big Dog robot in action: https://www.youtube.com/watch?v=W1czBcnX1Ww.

- For reactions to the sensationally creative line 5 move by AlphaGo, see “Move 37!! Lee Sedol vs AlphaGo Match 2,” at https://www.youtube.com/watch?v=JNrXgpSEEIE.

- Demis Hassabis describing reactions to AlphaGo from human Go players: https://www.youtube.com/watch?v=otJKzpNWZT4.

- For recent improvements in machine translation, see Gideon Lewis-Kraus, “The Great A.I. Awakening,” New York Times Magazine (December 14, 2016), available online at http://www.nytimes.com/2016/12/14/magazine/the-great-ai-awakening.html. GoogleTranslate is available here at https://translate.google.com.

- Winograd Schema Challenge competition: http://tinyurl.com/winogradchallenge.

- Ariane 5 explosion video: https://www.youtube.com/watch?v=qnHn8W1Em6E.

- Ariane 5 Flight 501 Failure report by the inquiry board: http://tinyurl.com/arianeflop.

- NASA’s Mars Climate Orbiter Mishap Investigation Board Phase I report http://tinyurl.com/marsflop.

- According to the most detailed and consistent account, the Mariner 1 Venus mission failure was caused by incorrect hand-transcription of a single mathematical symbol (a missing overbar): http://tinyurl.com/marinerflop.

- A detailed description of the failure of the Soviet Phobos 1 Mars mission can be found in Wesley T. Huntress Jr. and Mikhail Ya. Marov, Soviet Robots in the Solar System (New York: Praxis Publishing, 2011), p. 308.

- How unverified software cost Knight Capital $440 million in 45 minutes: http://tinyurl.com/knightflop1 and http://tinyurl.com/knightflop2.

- U.S. government report on the Wall Street “flash crash”: “Findings Regarding the Market Events of May 6, 2010” (September 30, 2010), at http://tinyurl.com/flashcrashreport.

- 3-D printing of buildings (https://www.youtube.com/watch?v=SObzNdyRTBs), micromechanical devices (http://tinyurl.com/tinyprinter) and many things in between (https://www.youtube.com/watch?v=xVU4FLrsPXs).

- Global map of community-based fab labs: https://www.fablabs.io/labs/map.

- News article about Robert Williams being killed by an industrial robot: http://tinyurl.com/williamsaccident.

- News article about Kenji Urada being killed by an industrial robot: http://tinyurl.com/uradaaccident.

- News article about Volkswagen worker being killed by an industrial robot: http://tinyurl.com/baunatalaccident.

- U.S. government report on worker fatalities: https://www.osha.gov/dep/fatcat/dep_fatcat.html.

- Car accident fatality statistics: http://tinyurl.com/roadsafety2 and http://tinyurl.com/roadsafety3.

- On the first Tesla autopilot fatality, see Andrew Buncombe, “Tesla Crash: Driver Who Died While on Autopilot Mode ‘Was Watching Harry Potter,’ ” Independent (July 1, 2016), http://tinyurl.com/teslacrashstory. For the report of the Office of Defects Investigation of the U.S. National Highway Traffic Safety Administration, see http://tinyurl.com/teslacrashreport.

- For more about the Herald of Free Enterprise disaster, see R. B. Whittingham, The Blame Machine: Why Human Error Causes Accidents (Oxford, UK: Elsevier, 2004).

- Documentary about the Air France 447 crash: https://www.youtube.com/watch?v=dpPkp8OGQFI; accident report: http://tinyurl.com/af447report; outside analysis: http://tinyurl.com/thomsonarticle.

- The official report on the 2003 U.S.-Canadian blackout: http://tinyurl.com/uscanadablackout.

- Final report of the President’s Commission on the Accident at Three Mile Island: http://www.threemileisland.org/downloads/188.pdf.

- Dutch study showing how AI can rival human radiologists at an MRI-based diagnosis of prostate cancer: http://tinyurl.com/prostate-ai.

- Stanford study showing how AI can best human pathologists at lung cancer diagnosis: http://tinyurl.com/lungcancer-ai.

- Investigation of the Therac-25 radiation therapy accidents: http://tinyurl.com/theracfailure.

- Report on lethal radiation overdoses in Panama caused by confusing user interface: http://tinyurl.com/cobalt60accident.

- Study of adverse events in robotic surgery: https://arxiv.org/abs/1507.03518.

- Article on several deaths from bad hospital care: http://tinyurl.com/medaccidents.

- Yahoo set a new standard for “big hack” when announcing a billion(!) of their user accounts had been breached: https://www.wired.com/2016/12/yahoo-hack-billion-users/.

- New York Times article on acquittal and later conviction of KKK murderer: http://tinyurl.com/kkkacquittal.

- The Danziger et al. 2011 study (http://www.pnas.org/content/108/17/6889.full), arguing that hungry judges are harsher, was criticized as flawed by Keren Weinshall-Margela and John Shapard (http://www.pnas.org/content/108/42/E833.full), but Danziger et al. insist that their claims remain valid (http://www.pnas.org/content/108/42/E834.full).

- Pro Publica report on racial bias in recidivism-prediction software: http://tinyurl.com/robojudge.

- Use of fMRI and other brain-scanning techniques as evidence in trials is highly controversial, as is the reliability of such techniques, although many teams claim accuracies better than 90%: http://journal.frontiersin.org/article/10.3389/fpsyg.2015.00709/full.

- PBS made the movie The Man Who Saved the World about the incident where Vasili Arkhipov single-handedly prevented a Soviet nuclear strike: https://www.youtube.com/watch?v=4VPY2SgyG5w.

- The story of how Stanislav Petrov dismissed warnings of a U.S. nuclear attack as a false alarm was turned into the movie The Man Who Saved the World (not to be confused with the movie by the same title in the previous note), and Petrov was honored at the United Nations and given the World Citizen Award: https://www.youtube.com/watch?v=IncSjwWQHMo.

- Open letter from AI and robotics researchers about autonomous weapons: http://futureoflife.org/open-letter-autonomous-weapons/.

- A U.S. official seemingly wanting a military AI arms race: http://tinyurl.com/workquote.

- Study of wealth inequality in the United States since 1913: http://gabriel-zucman.eu/files/SaezZucman2015.pdf.

- Oxfam report on global wealth inequality: http://tinyurl.com/oxfam2017.

- For a great introduction to the hypothesis of technology-driven inequality, see Erik Brynjolfsson and Andrew McAfee, The Second Machine Age: Work, Progress, and Prosperity in a Time of Brilliant Technologies (New York: Norton, 2014).

- Article in The Atlantic about falling wages for the less educated: http://tinyurl.com/wagedrop.

- The data plotted are taken from Facundo Alvaredo, Anthony B. Atkinson, Thomas Piketty, Emmanuel Saez, and Gabriel Zucman, The World Wealth and Income Database (http://www.wid.world), including capital gains.

- Presentation by James Manyika showing income shifting from labor to capital: http://futureoflife.org/data/PDF/james_manyika.pdf.

- Forecasts about future job automation from Oxford University (http://tinyurl.com/automationoxford) and McKinsey (http://tinyurl.com/automationmckinsey).

- Video of robotic chef: https://www.youtube.com/watch?v=fE6i2OO6Y6s.

- Marin Soljačić explored these options at the 2016 workshop Computers Gone Wild: Impact and Implications of Developments in Artificial Intelligence on Society: http://futureoflife.org/2016/05/06/computers-gone-wild/.

- Andrew McAfee’s suggestions for how to create more good jobs: http://futureoflife.org/data/PDF/andrew_mcafee.pdf.

- In addition to many academic articles arguing that “this time is different” for technological unemployment, the video “Humans Need Not Apply” succinctly makes the same point: https://www.youtube.com/watch?v=7Pq-S557XQU.

- U.S. Bureau of Labor Statistics: http://www.bls.gov/cps/cpsaat11.htm.

- Argument that “this time is different” for technological unemployment: Federico Pistono, Robots Will Steal Your Job, but That’s OK (2012), http://robotswillstealyourjob.com.

- Changes in the U.S. horse population: http://tinyurl.com/horsedecline.

- Meta-analysis showing how unemployment affects well-being: Maike Luhmann et al., “Subjective Well-Being and Adaptation to Life Events: A Meta-Analysis,” Journal of Personality and Social Psychology 102, no. 3 (2012): 592; available online at https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3289759.

- Studies of what boosts people’s sense of well-being: Angela Duckworth, Tracy Steen, and Martin Seligman, “Positive Psychology in Clinical Practice,” Annual Review of Clinical Psychology 1 (2005): 629–651, online at http://tinyurl.com/wellbeingduckworth. Weiting Ng and Ed Diener, “What Matters to the Rich and the Poor? Subjective Well-Being, Financial Satisfaction, and Postmaterialist Needs Across the World,” Journal of Personality and Social Psychology 107, no. 2 (2014): 326, online at http://psycnet.apa.org/journals/psp/107/2/326. Kirsten Weir, “More than Job Satisfaction,” Monitor on Psychology 44, no. 11 (December 2013), online at http://www.apa.org/monitor/2013/12/job-satisfaction.aspx.

- Multiplying together about 10^11 neurons, about 10^4 connections per neuron and about one (10^0) firing per neuron each second might suggest that about 10^15 FLOPS (1 petaFLOPS) suffice to simulate a human brain, but there are many poorly understood complications, including the detailed timing of firings and the question of whether small parts of neurons and synapses need to be simulated too. IBM computer scientist Dharmendra Modha has estimated that 38 petaFLOPS are required (http://tinyurl.com/javln43), while neuroscientist Henry Markram has estimated that one needs about 1,000 petaFLOPS (http://tinyurl.com/6rpohqv). AI researchers Katja Grace and Paul Christiano have argued that the most costly aspect of brain simulation is not computation but communication and that this too is a task in the ballpark of what the best current supercomputers can do: http://aiimpacts.org/about.

- For an interesting estimate of the computational power of the human brain: Hans Moravec “When Will Computer Hardware Match the Human Brain?” Journal of Evolution and Technology, vol. 1 (1998).

- For a video of the first mechanical bird, see Markus Fischer, “A Robot That Flies like a Bird,” TED Talk, July 2011, at https://www.ted.com/talks/a_robot_that_flies_like_a_bird.

- Ray Kurzweil, The Singularity Is Near (New York: Viking Press, 2005).

- Ben Goertzel’s “Nanny AI” scenario is described here: https://wiki.lesswrong.com/wiki/Nanny_AI.

- For a discussion about the relationship between machines and humans, and whether machines are our slaves, see Benjamin Wallace-Wells, “Boyhood,” New York magazine (May 20, 2015), online at http://tinyurl.com/aislaves.

- Mind crime is discussed in Nick Bostrom’s book Superintelligence and more technical detail in this recent paper: Nick Bostrom, Allan Dafoe, and Carrick Flynn, “Policy Desiderata in the Development of Machine Superintelligence” (2016), http://www.nickbostrom.com/papers/aipolicy.pdf.

- Matthew Schofield, “Memories of Stasi Color Germans’ View of U.S. Surveillance Programs,” McClatchy DC Bureau (June 26, 2013), online at http://www.mcclatchydc.com/news/nation-world/national/article24750439.html.

- For thought-provoking reflections on how people can be incentivized to create outcomes that nobody wants, I recommend “Meditations on Moloch,” http://slatestarcodex.com/2014/07/30/meditations-on-moloch.

- For an interactive timeline of close calls when nuclear war might have started by accident, see Future of Life Institute, “Accidental Nuclear War: A Timeline of Close Calls,” online at http://tinyurl.com/nukeoops.

- For compensation payments made to U.S. nuclear testing victims, see the U.S. Department of Justice website, “Awards to Date 4/24/2015,” at https://www.justice.gov/civil/awards-date-04242015.

- Report of the Commission to Assess the Threat to the United States from Electromagnetic Pulse (EMP) Attack, April 2008, available online at http://www.empcommission.org/docs/A2473-EMP_Commission-7MB.pdf.

- Independent research by both U.S. and Soviet scientists alerted Reagan and Gorbachev to the risk of nuclear winter: P. J. Crutzen and J. W. Birks, “The Atmosphere After a Nuclear War: Twilight at Noon,” Ambio 11, no. 2/3 (1982): 114–125. R. P. Turco, O. B. Toon, T. P. Ackerman, J. B. Pollack, and C. Sagan, “Nuclear Winter: Global Consequences of Multiple Nuclear Explosions,” Science 222 (1983): 1283–1292. V. V. Aleksandrov and G. L. Stenchikov, “On the Modeling of the Climatic Consequences of the Nuclear War,” Proceeding on Applied Mathematics (Moscow: Computing Centre of the USSR Academy of Sciences, 1983), 21. A. Robock, “Snow and Ice Feedbacks Prolong Effects of Nuclear Winter,” Nature 310 (1984): 667–670.

- Calculation of climate effects of global nuclear war: A. Robock, L. Oman and G. L. Stenchikov, “Nuclear Winter Revisited with a Modern Climate Model and Current Nuclear Arsenals: Still Catastrophic Consequences,” Journal of Geophysical Research 112 (2007): D13107.
